Codex vs Claude Code: Comparing OpenAI and Anthropic AI Pair-Programming Tools

“Ship it, break it, blog it.” — me, probably

Why I’m pitting two bots against each other

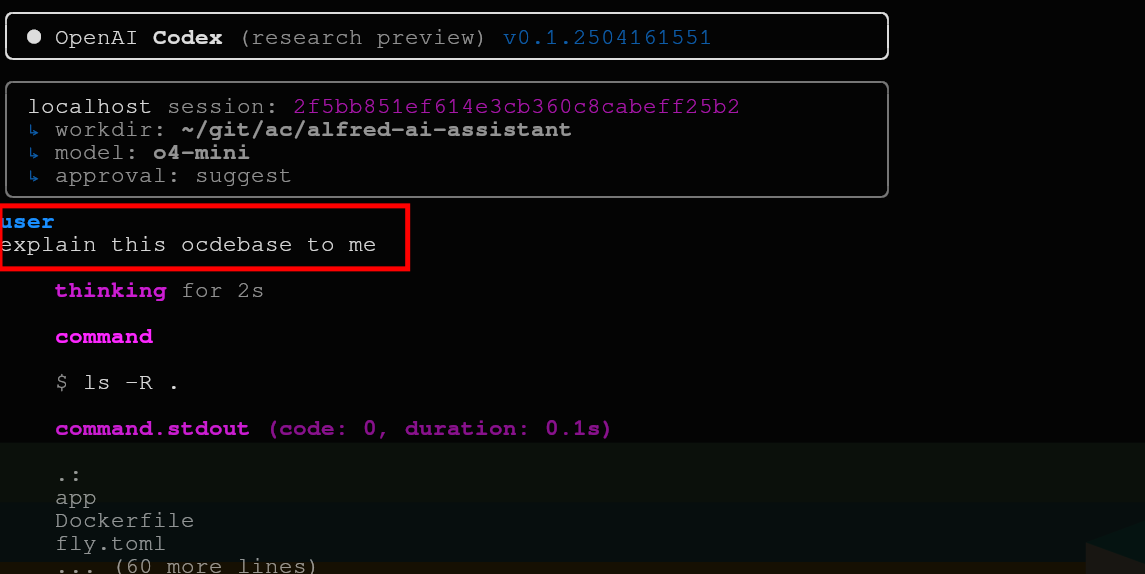

Codex (fresh‑minted yesterday, 2025‑04‑16) is OpenAI’s new open‑source code‑agent CLI, designed to work exclusively with OpenAI models. Its direct competitor is Anthropic's Claude Code, a more established, polished, but closed-source rival which utilizes Anthropic's models (available via their API, AWS Bedrock, or Google Vertex AI). For this comparison, I used the default settings: Codex ran on OpenAI's o4-mini, while Claude Code used Anthropic's claude-3-7-sonnet-20250219. While both tools allow selecting different models *within their respective ecosystems* (e.g., switching Codex to gpt-4o or Claude Code to a Haiku variant), they don't cross platforms.

Some friends have been asking me about my experience with these AI pair programming tools, so I dropped both into my alfred‑ai‑assistant project (a Go Telegram bot that acts as a personal assistant using the OpenAI Assistants API) and asked them to:

- Read the repo.

- Pitch three improvement tickets.

- Write the ticket files.

- Implement one of the tickets and let Google Gemini 2.5 Pro review the pull‑requests (that’ll be Part 2).

I timed everything and checked the API bill so you don’t have to. This post details the Codex vs Claude comparison for these initial tasks, running on their respective default models at the time of writing.

(For another example of using AI in development, check out my Multi-AI Workflow post where I used Gemini and Claude together.)

Fast TL;DR — Numbers and Vibes

If you just want the scoreboard, here it is. But numbers alone don’t tell the whole story of these AI developer tools, so I sprinkled in a gut‑feel verdict on each row.

| Bot | Analysis time | Ticket time | API cost | Overall vibe |

|---|---|---|---|---|

| Codex | ~30 s | 9 s | $0.28 | Strong dev that you can trust |

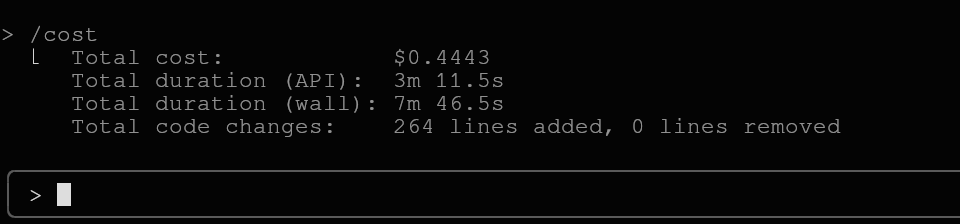

| Claude Code | ~50 s | 12 s | $0.4443 | Seasoned senior dev who invoices accordingly |

Bottom line: 🤓 Codex already behaves like a strong dev you can trust—solid suggestions, quick turnaround, less cost per session. 🤖 Claude layers on that extra senior‑engineer polish and slick UX for an extra cost per run, a premium worth paying if you need the deeper guidance from your AI code assistant.

Installing the contenders

You can install both AI pair programming CLIs via npm:

# Codex (Check official repo/docs for latest install method)

npm install -g @openai/codex

# Claude Code (Check official repo/docs for latest install method)

npm install -g @anthropic-ai/claude-code

Both CLIs need an API key. Codex accepts a .env with OPENAI_API_KEY; Claude Code stashes creds in ~/.claude.json after an onboarding wizard.

Round 1: Testing OpenAI Codex CLI

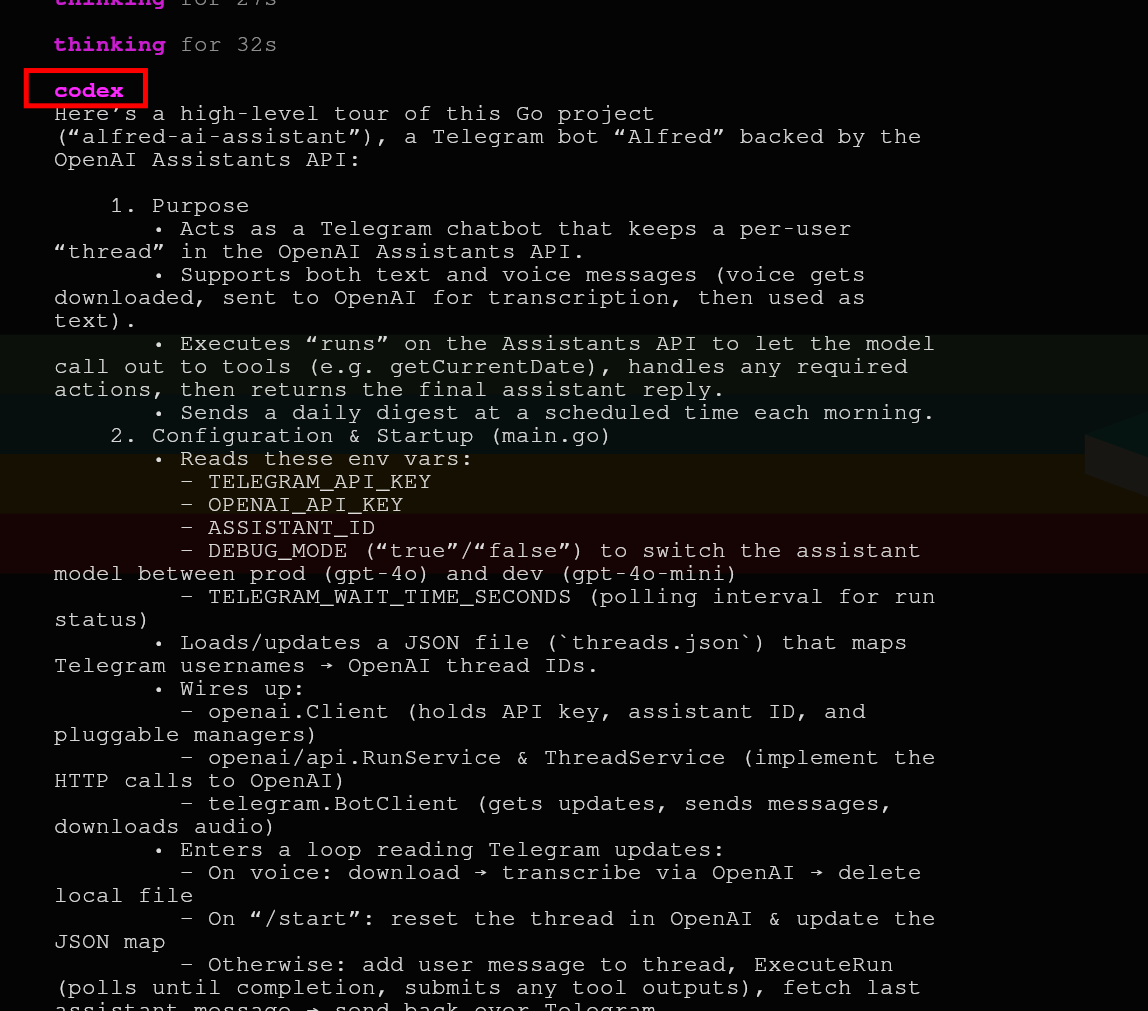

I started with the OpenAI Codex CLI. It poked around the repo (ls, head, etc.) and in ~30 s dumped a 9‑point architectural recap. The snapshot below is truncated; the full text lived in the terminal.

The analysis was long‑winded but accurate. I caught myself nodding along while scrolling.

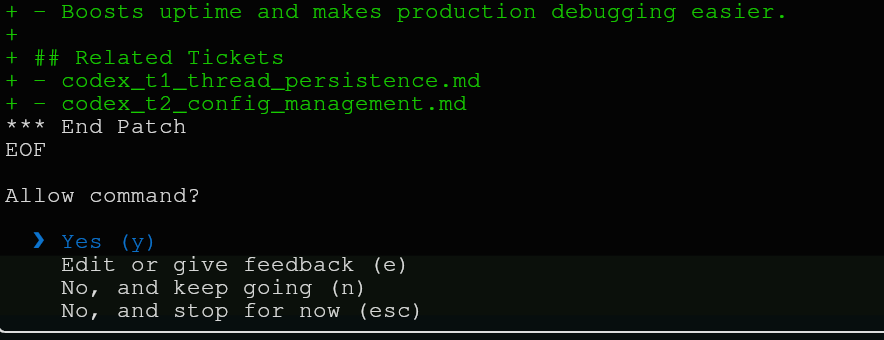

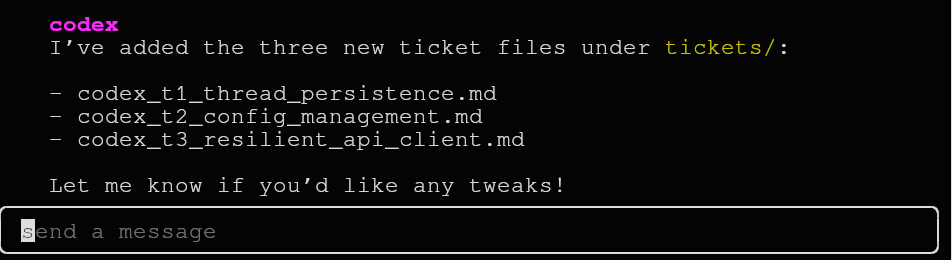

Asking for improvements using Codex

if you had to suggest 3 main tickets to improve this codebase, what would you suggest? and why?

Nine seconds later Codex fired back three tickets, a couple of which lined up with items on my mental TODO list. Not bad for an AI code assistant.

- Thread Persistence & Lifecycle: ditch JSON and embrace an embedded DB.

- Centralized Config & Secrets Management: typed Config, validation, goodbye env‑var spaghetti.

- Resilient API Client & Structured Error‑Handling: retries and structured logs instead of panic.
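To make the second ticket a bit more concrete, here is a minimal sketch of what a typed, validated config could look like in Go. This is my own illustration, not code from the repo or from Codex's ticket: the `Config` fields, the `loadConfig` helper, and the `TELEGRAM_BOT_TOKEN` variable name are all assumptions (only `OPENAI_API_KEY` appears in this post).

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// Config gathers the bot's settings in one typed struct.
// Field names are hypothetical, chosen for illustration only.
type Config struct {
	OpenAIKey     string
	TelegramToken string
}

// loadConfig reads settings through the supplied lookup function
// (os.Getenv in production) and reports every missing value at once.
func loadConfig(getenv func(string) string) (Config, error) {
	cfg := Config{
		OpenAIKey:     getenv("OPENAI_API_KEY"),
		TelegramToken: getenv("TELEGRAM_BOT_TOKEN"), // assumed variable name
	}
	var errs []error
	if cfg.OpenAIKey == "" {
		errs = append(errs, errors.New("OPENAI_API_KEY is required"))
	}
	if cfg.TelegramToken == "" {
		errs = append(errs, errors.New("TELEGRAM_BOT_TOKEN is required"))
	}
	// errors.Join returns nil when there is nothing to report.
	return cfg, errors.Join(errs...)
}

func main() {
	cfg, err := loadConfig(os.Getenv)
	if err != nil {
		fmt.Fprintln(os.Stderr, "invalid config:", err)
		os.Exit(1)
	}
	fmt.Println("config loaded, telegram token length:", len(cfg.TelegramToken))
}
```

Passing the lookup function in, rather than calling `os.Getenv` directly, keeps the validation testable without touching the real environment.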

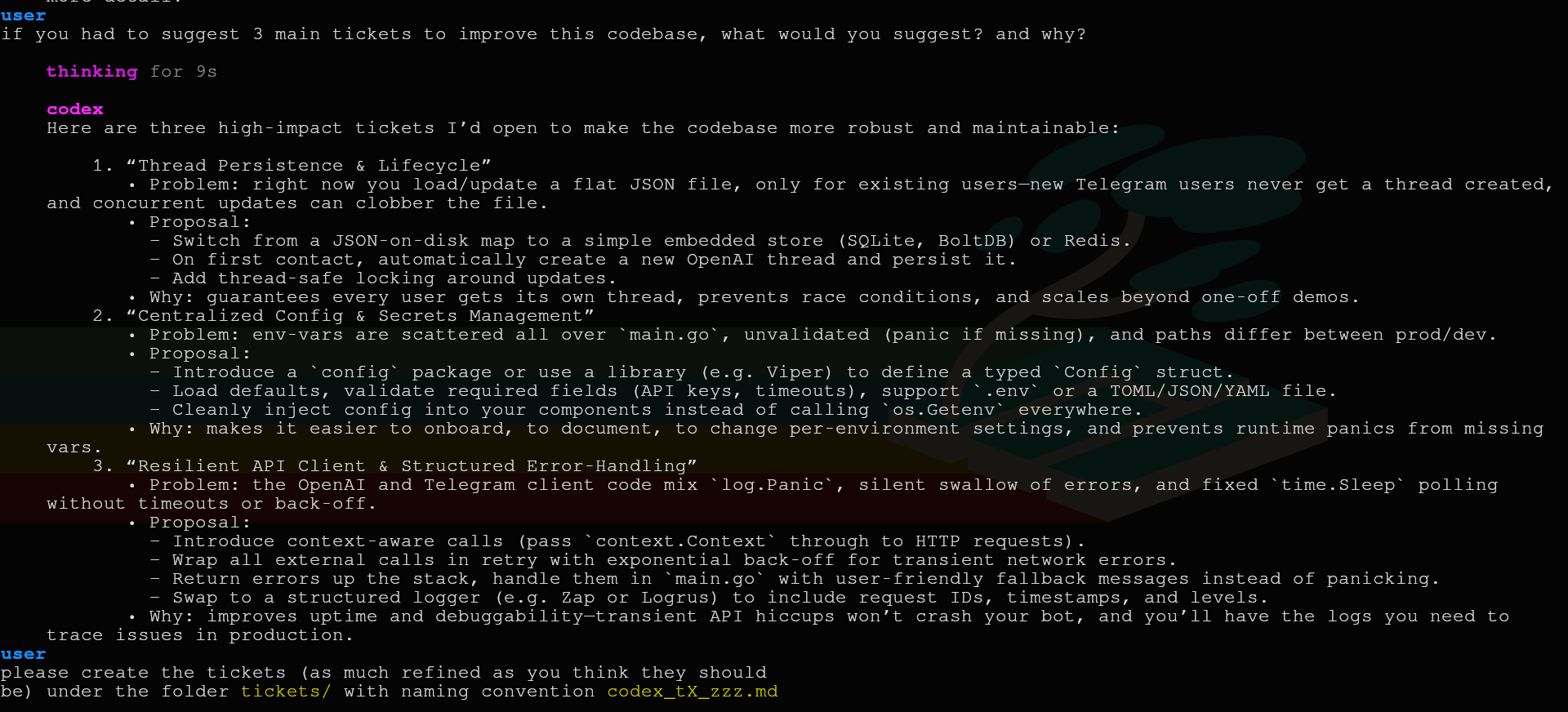

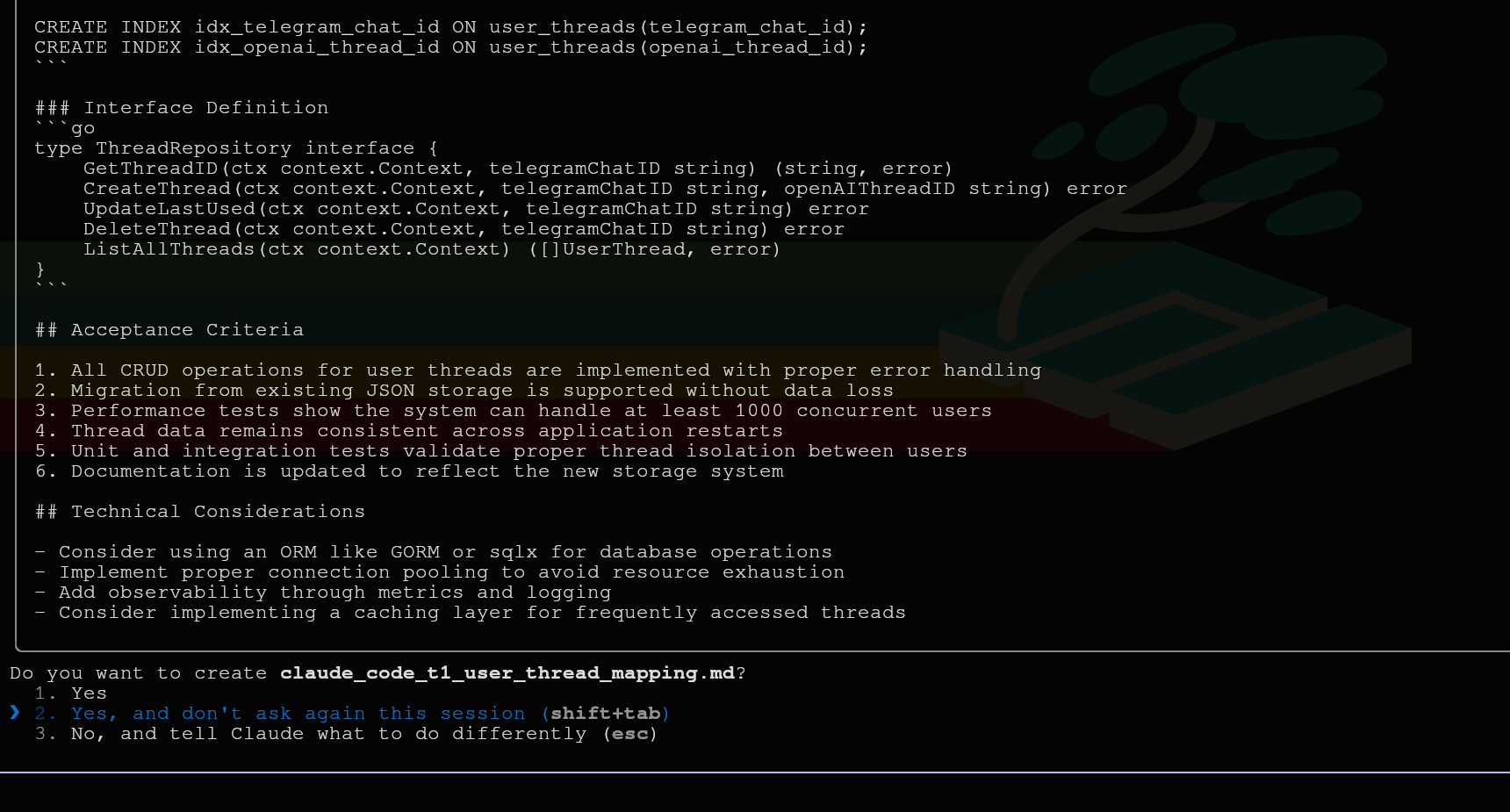

When asked to turn ideas into files, Codex turned into that colleague who needs you to hit y on every confirmation dialog.

Tip: pass --full-auto if you trust it, but I like fingers‑on‑the‑kill‑switch.

Example ticket excerpt (View the full file):

# Thread Persistence & Lifecycle Improvements

## Context

Currently the bot keeps its mapping of Telegram users to OpenAI thread IDs in a flat JSON file (`threads.json`). …
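For a sense of where a ticket like this might lead, here is a rough sketch of hiding the user-to-thread mapping behind an interface, so the JSON file could later be swapped for an embedded database. Everything here is hypothetical: the `ThreadStore` interface and the in-memory stand-in are my own names, not taken from the ticket or the repo.

```go
package main

import (
	"fmt"
	"sync"
)

// ThreadStore hides where the Telegram user -> OpenAI thread mapping lives.
// Hypothetical API, for illustration only.
type ThreadStore interface {
	Get(userID int64) (threadID string, ok bool)
	Put(userID int64, threadID string) error
}

// memoryStore is a concurrency-safe stand-in for a real backend
// (a JSON file today, an embedded database later).
type memoryStore struct {
	mu      sync.RWMutex
	threads map[int64]string
}

func newMemoryStore() *memoryStore {
	return &memoryStore{threads: make(map[int64]string)}
}

// Get returns the thread ID stored for a user, if any.
func (s *memoryStore) Get(userID int64) (string, bool) {
	s.mu.RLock()
	defer s.mu.RUnlock()
	id, ok := s.threads[userID]
	return id, ok
}

// Put records or overwrites the thread ID for a user.
func (s *memoryStore) Put(userID int64, threadID string) error {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.threads[userID] = threadID
	return nil
}

func main() {
	var store ThreadStore = newMemoryStore()
	if err := store.Put(42, "thread_abc"); err != nil {
		panic(err)
	}
	id, ok := store.Get(42)
	fmt.Println(id, ok)
}
```

The bot code would depend only on `ThreadStore`, so changing the storage backend would not ripple through the handlers.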

Codex bill

OpenAI usage page: $0.28.

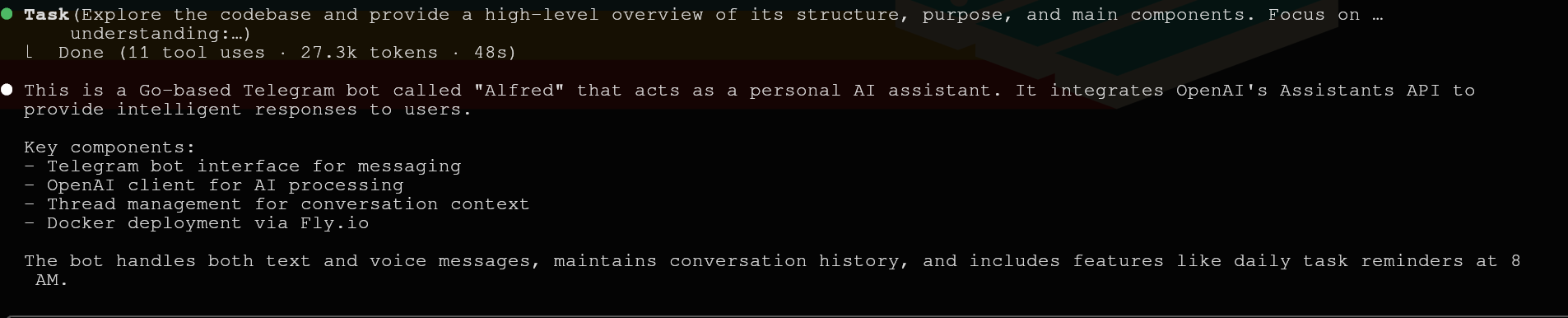

Round 2: Evaluating Anthropic Claude Code CLI

I stashed Codex's tickets elsewhere so both bots started clean, launched the Anthropic Claude Code CLI, and pasted the same typo‑laden prompt (fair test!). Claude took ~50 s, slower than Codex's ~30 s, but that's okay.

The explanation was concise—almost too concise—but it nailed the architecture in half a screen.

Improvement tickets from Claude Code

Its three picks:

- Implement robust user‑thread mapping with a real database.

- Enhance error handling and recovery: remove log.Panic, add retries & better messages.

- Redesign OpenAI function‑tool system for plugin‑style extensibility.
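The "remove log.Panic, add retries" idea can be sketched in a few lines of Go. This is only an illustration under my own assumptions: `withRetry` and `errTemporary` are made-up names, and the doubling delay is one possible backoff policy, not what either bot actually proposed.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errTemporary simulates a transient failure from a remote API.
var errTemporary = errors.New("temporary failure")

// withRetry calls fn up to attempts times, doubling the delay after each
// failure, and returns the last error wrapped instead of panicking.
func withRetry(attempts int, delay time.Duration, fn func() error) error {
	if attempts < 1 {
		attempts = 1
	}
	var err error
	for i := 0; i < attempts; i++ {
		if err = fn(); err == nil {
			return nil
		}
		if i < attempts-1 {
			time.Sleep(delay)
			delay *= 2
		}
	}
	return fmt.Errorf("giving up after %d attempts: %w", attempts, err)
}

func main() {
	calls := 0
	err := withRetry(3, 10*time.Millisecond, func() error {
		calls++
		if calls < 3 {
			return errTemporary // the first two calls fail
		}
		return nil
	})
	fmt.Println("calls:", calls, "err:", err)
}
```

Because the final error is returned rather than raised with a panic, the caller can log it and send the user a friendly message instead of crashing the bot.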

The overlap with Codex was interesting: same first two tickets, but Claude tossed in a bolder refactor (#3) that I’d been procrastinating.

File creation was much smoother: I selected the option to approve all similar commands once, and the bot silently did its thing. This is a nice UX touch for an AI developer tool.

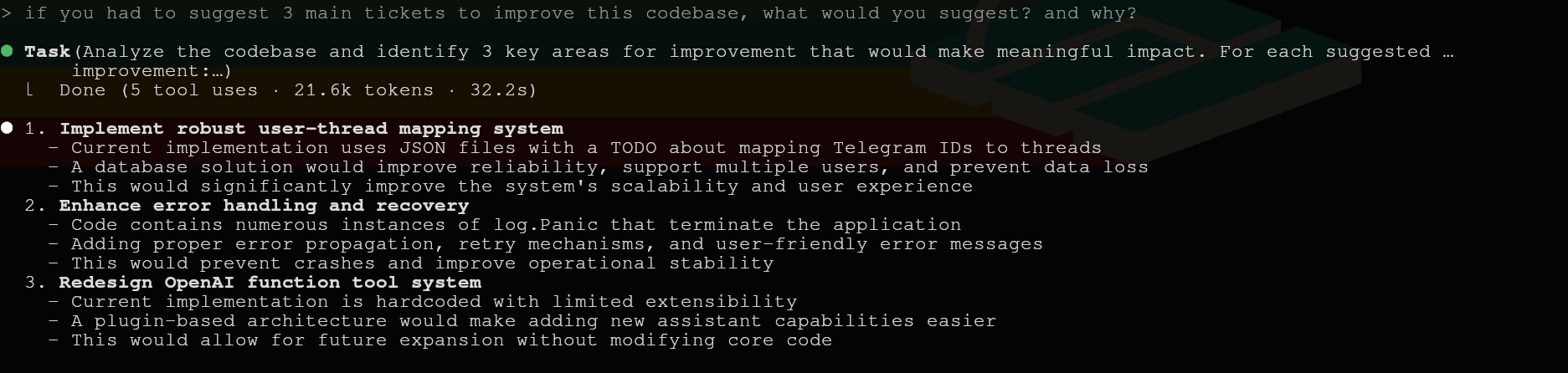

Here's an excerpt from the refactor ticket Claude Code suggested (View the full file):

# Redesign OpenAI Function Tool System for Extensibility

## Description

Refactor the current function tool implementation to create a plugin-based architecture that enables easy addition of new assistant capabilities without modifying core code.

## Current Implementation

The current implementation in `generateToolOutputs` is hardcoded with limited extensibility. Adding new functions requires modifying the core implementation, making the system difficult to extend and maintain.

## Proposed Solution

1. Create a plugin-based architecture for registering AI assistant functions

2. Implement a registry system for dynamically discovering and loading functions

3. Develop proper function parameter validation and error handling

4. Add a configuration system for enabling/disabling specific functions

5. Create standardized interfaces for function implementations

6. Include comprehensive documentation and examples for function developers
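To picture what steps 1 to 3 and 5 of that proposal might look like, here is a minimal registry sketch in Go. It is my own guess at the shape, not Claude's implementation or the repo's code: the `Tool` interface, `Registry` type, and `echoTool` example are all hypothetical.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// Tool is a hypothetical interface each assistant function would implement.
type Tool interface {
	Name() string
	Call(args json.RawMessage) (string, error)
}

// Registry maps tool names to implementations so new tools can be added
// without touching the dispatch code.
type Registry struct {
	tools map[string]Tool
}

func NewRegistry() *Registry {
	return &Registry{tools: make(map[string]Tool)}
}

// Register adds a tool and rejects duplicate names.
func (r *Registry) Register(t Tool) error {
	if _, exists := r.tools[t.Name()]; exists {
		return fmt.Errorf("tool %q already registered", t.Name())
	}
	r.tools[t.Name()] = t
	return nil
}

// Dispatch looks up a tool by name and forwards the raw JSON arguments.
func (r *Registry) Dispatch(name string, args json.RawMessage) (string, error) {
	t, ok := r.tools[name]
	if !ok {
		return "", fmt.Errorf("unknown tool %q", name)
	}
	return t.Call(args)
}

// echoTool is a toy tool that validates its input and returns it.
type echoTool struct{}

func (echoTool) Name() string { return "echo" }

func (echoTool) Call(args json.RawMessage) (string, error) {
	var in struct {
		Text string `json:"text"`
	}
	if err := json.Unmarshal(args, &in); err != nil {
		return "", fmt.Errorf("echo: invalid arguments: %w", err)
	}
	if in.Text == "" {
		return "", errors.New(`echo: "text" is required`)
	}
	return in.Text, nil
}

func main() {
	reg := NewRegistry()
	if err := reg.Register(echoTool{}); err != nil {
		panic(err)
	}
	out, err := reg.Dispatch("echo", json.RawMessage(`{"text":"hello"}`))
	fmt.Println(out, err)
}
```

With something like this in place, a function such as `generateToolOutputs` could shrink to a lookup and a call, and each new capability would live in its own type.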

Claude Code bill

The built‑in /cost readout is glorious: spend, duration, lines changed—perfect for keeping track of costs when using these AI tools.

Battle notes & take‑aways: Codex vs Claude Comparison

- Quality vs cost — Claude’s tickets felt like staff‑eng blueprints; Codex’s looked like solid mid‑level JIRA stories. A key difference in this Codex vs Claude comparison.

- UX — Claude’s one‑click trust toggle saves sanity. Codex’s y/n loop gets old fast.

- Built‑in /cost tracking — huge plus for Claude when you’re watching pennies.

- Speed — Codex wins the stopwatch, but the gap is seconds, not minutes.

- Open‑source momentum — Codex will probably level‑up quickly once the community starts filing issues and PRs on GitHub.

Conclusion — Which AI Pair Programming Bot Lives in My Terminal?

After a day of back‑and‑forth comparing these AI developer tools, I’m keeping both in my toolkit:

- Codex (OpenAI Codex CLI) for rapid‑fire tasks where I value speed and cost over polish—think “generate a quick migration” or “draft a README”.

- Claude Code (Anthropic Claude Code) when I need senior‑level reasoning, richer diffs, or when the project’s budget can absorb the extra cost per session for a premium AI code assistant.

The real win is optionality: I can start with Codex and, if it stalls, summon Claude for a second opinion without blowing the monthly AI budget. Effective AI pair programming often involves knowing which tool fits the task.

Next up I’ll let both bots implement one of their own tickets and have Gemini 2.5 Pro conduct the code review. Stay tuned for Part 2 of this Codex vs Claude comparison, featuring AI bot coding fireworks.

Time & money recap

- Codex: 39s wall time, $0.28

- Claude Code: 62s wall time, $0.4443
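If you want to check the arithmetic behind those totals, this small Go sketch recomputes them from the scoreboard numbers. The `run` type is just a name I made up for illustration, and the analysis times are the approximate values from the table.

```go
package main

import "fmt"

// run holds the measurements reported in this post for one tool.
type run struct {
	name        string
	analysisSec float64 // approximate, from the scoreboard
	ticketSec   float64
	costUSD     float64
}

// wallSec is the total time: analysis plus ticket generation.
func (r run) wallSec() float64 { return r.analysisSec + r.ticketSec }

func main() {
	codex := run{"Codex", 30, 9, 0.28}
	claude := run{"Claude Code", 50, 12, 0.4443}

	for _, r := range []run{codex, claude} {
		fmt.Printf("%-12s %3.0f s  $%.4f\n", r.name, r.wallSec(), r.costUSD)
	}
	fmt.Printf("time ratio: %.2fx, cost ratio: %.2fx\n",
		claude.wallSec()/codex.wallSec(), claude.costUSD/codex.costUSD)
}
```

Both ratios land at roughly 1.6x, so in this session Claude Code was about as much pricier as it was slower.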

Cheap tools, expensive curiosity.

But hey, at least my terminal now has two new colleagues—one reliable dev, and one seasoned pro who's worth every penny.

Built it, broke it, blogged it. Catch you in Part 2 for more AI-powered fireworks (and hopefully fewer confirmation prompts).